About me

I am a third-year PhD student at Stanford University, co-advised by Prof. Leonidas Guibas and Prof. Gordon Wetzstein. My research was generously supported by the Qualcomm Innovation Fellowship.

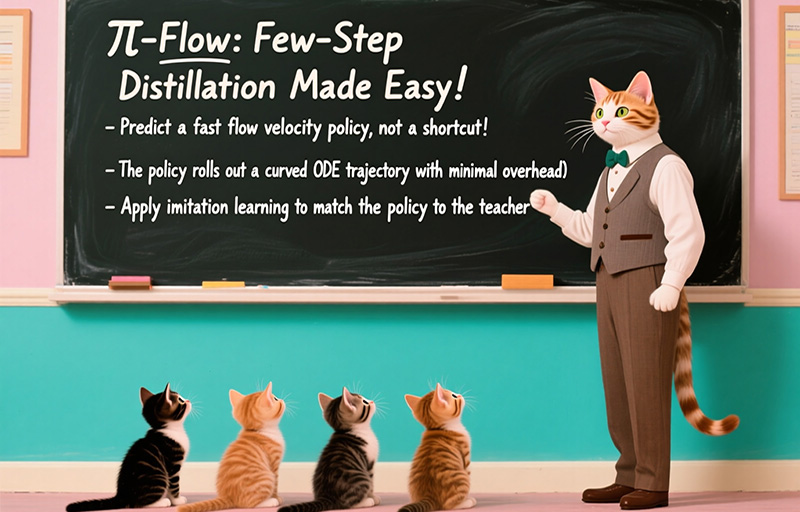

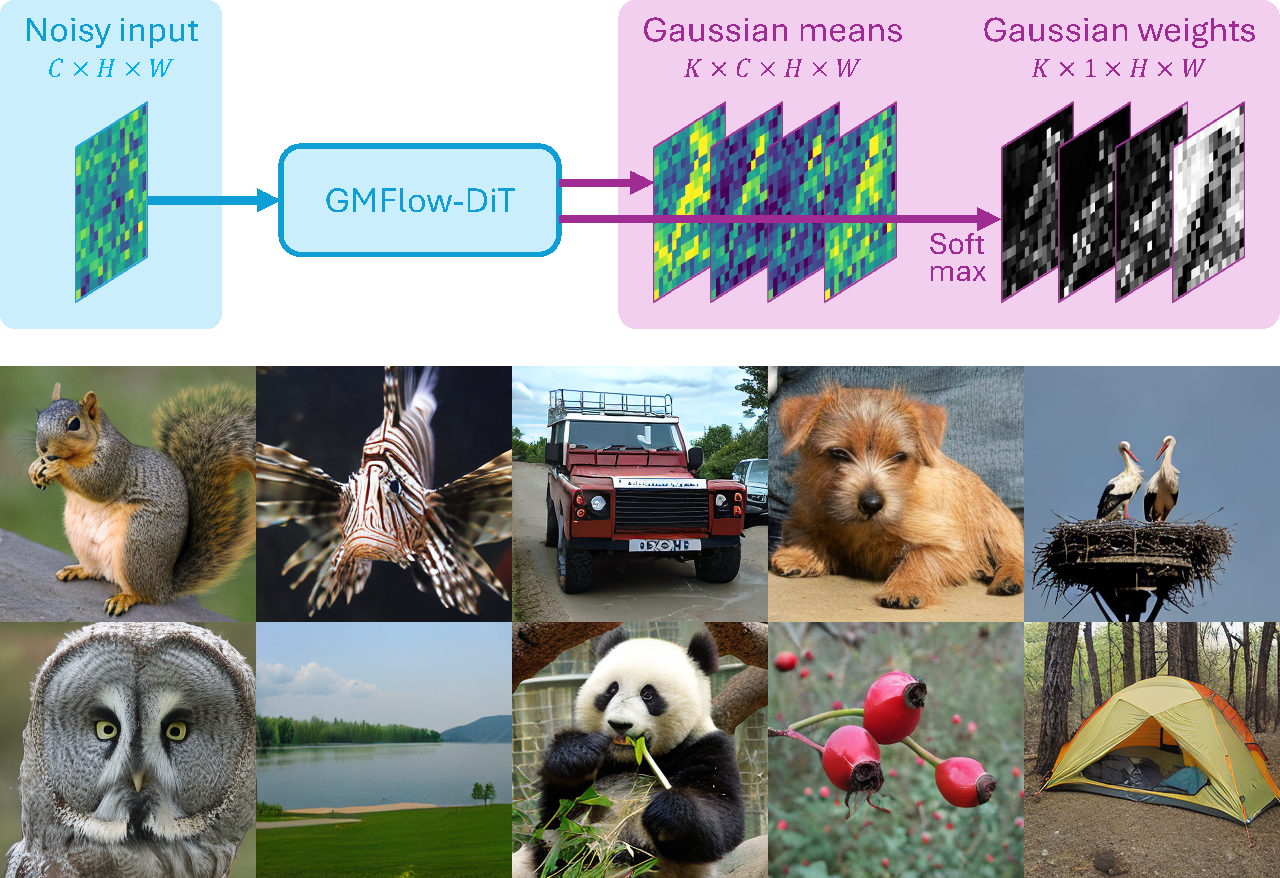

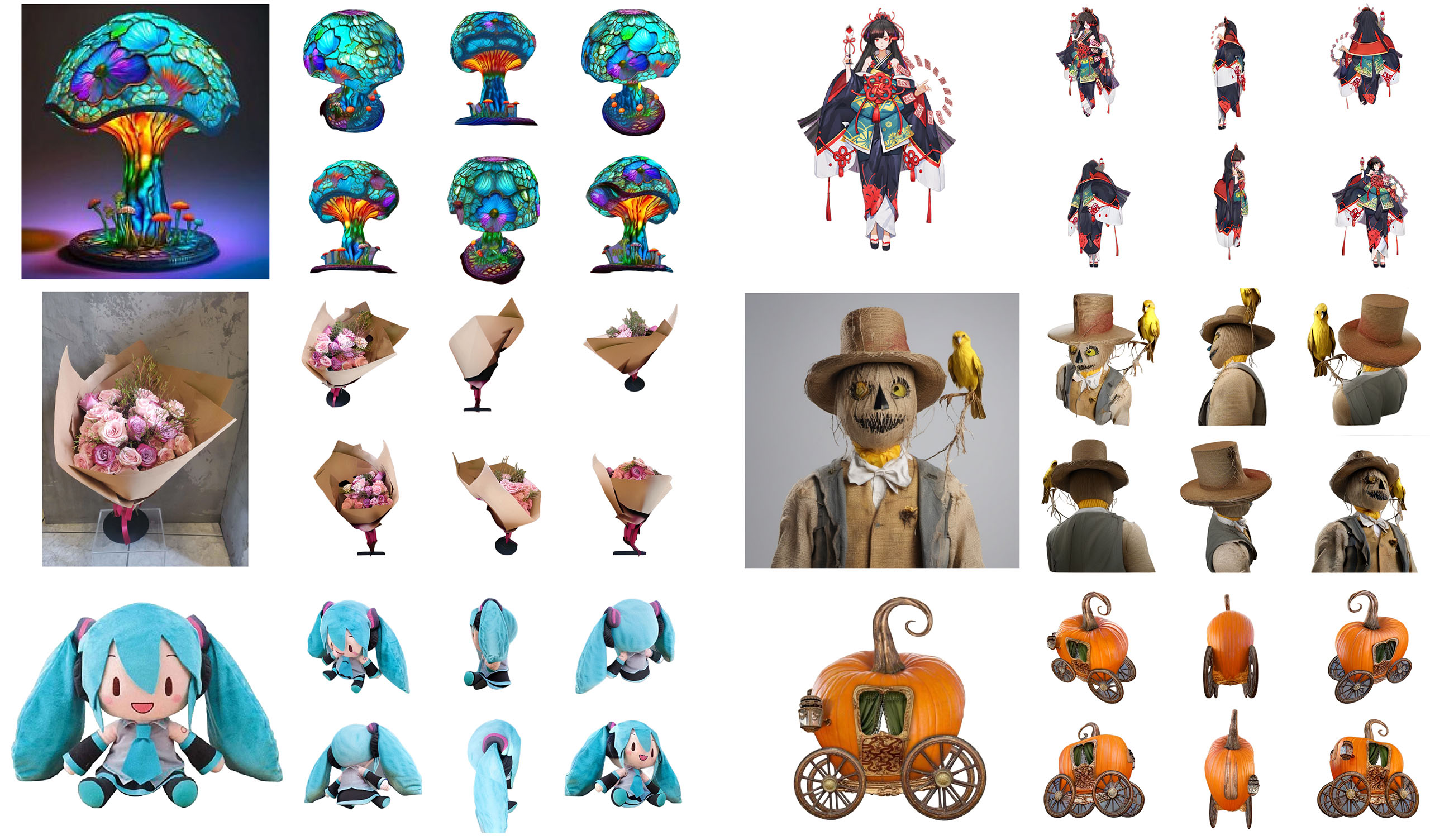

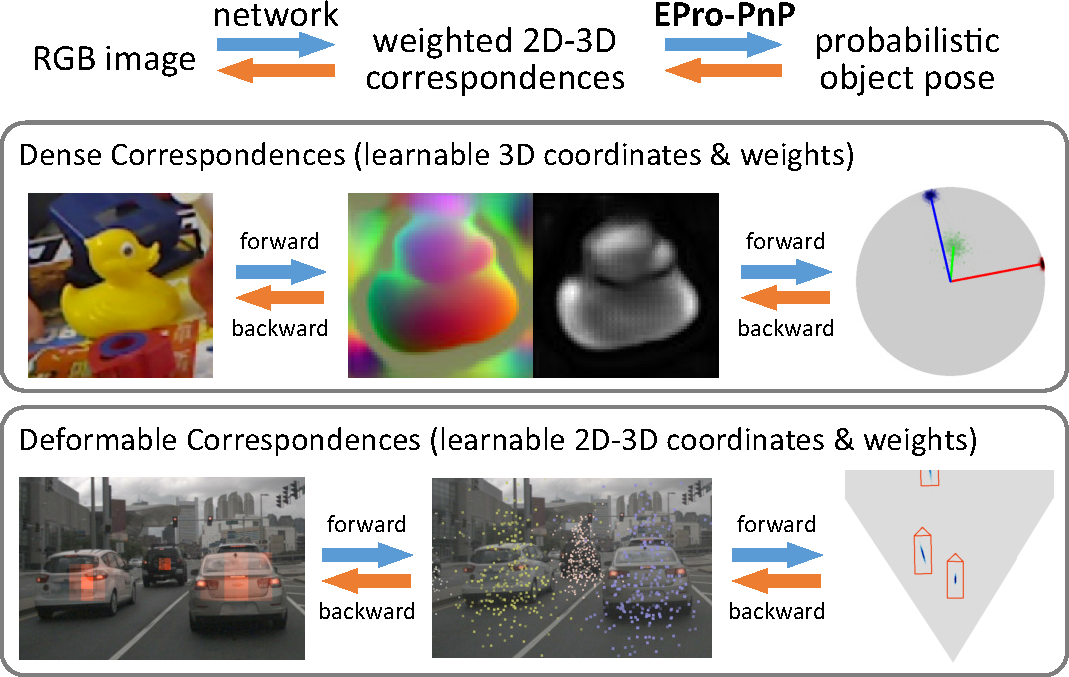

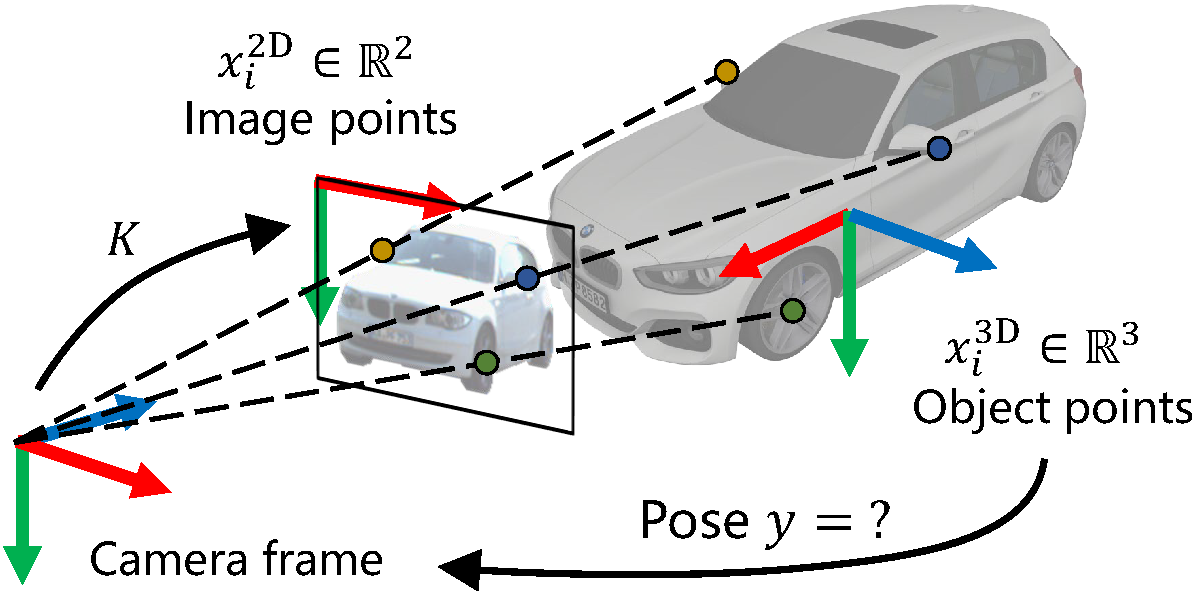

I am interested in the fundamentals of generative models, with a current focus on diffusion and flow-based models. Previously, I worked on 3D generation and pose estimation; my work EPro-PnP received the Best Student Paper Award at CVPR 2022.